A Simple Guide to Understanding Bias in AI & Large Language Models

First Published on 15 March 2023.

Why should you read this post, and why is it relevant?

In recent years, large language models have gained significant attention and popularity due to their remarkable capabilities in natural language processing, conversational AI, and generative tasks. However, alongside their advancements, concerns regarding bias in these models have also come to the forefront. In this blog post, we will delve into the issue of bias in large language models, exploring its causes, implications, and potential solutions.

The Nature of Bias:

Bias in large language models arises from several sources. First, these models are trained on vast amounts of text collected from across the internet and other sources, and that text reflects the biases present in society, including gender, racial, and cultural biases. Consequently, the models tend to perpetuate and even amplify these biases, producing biased predictions and responses.

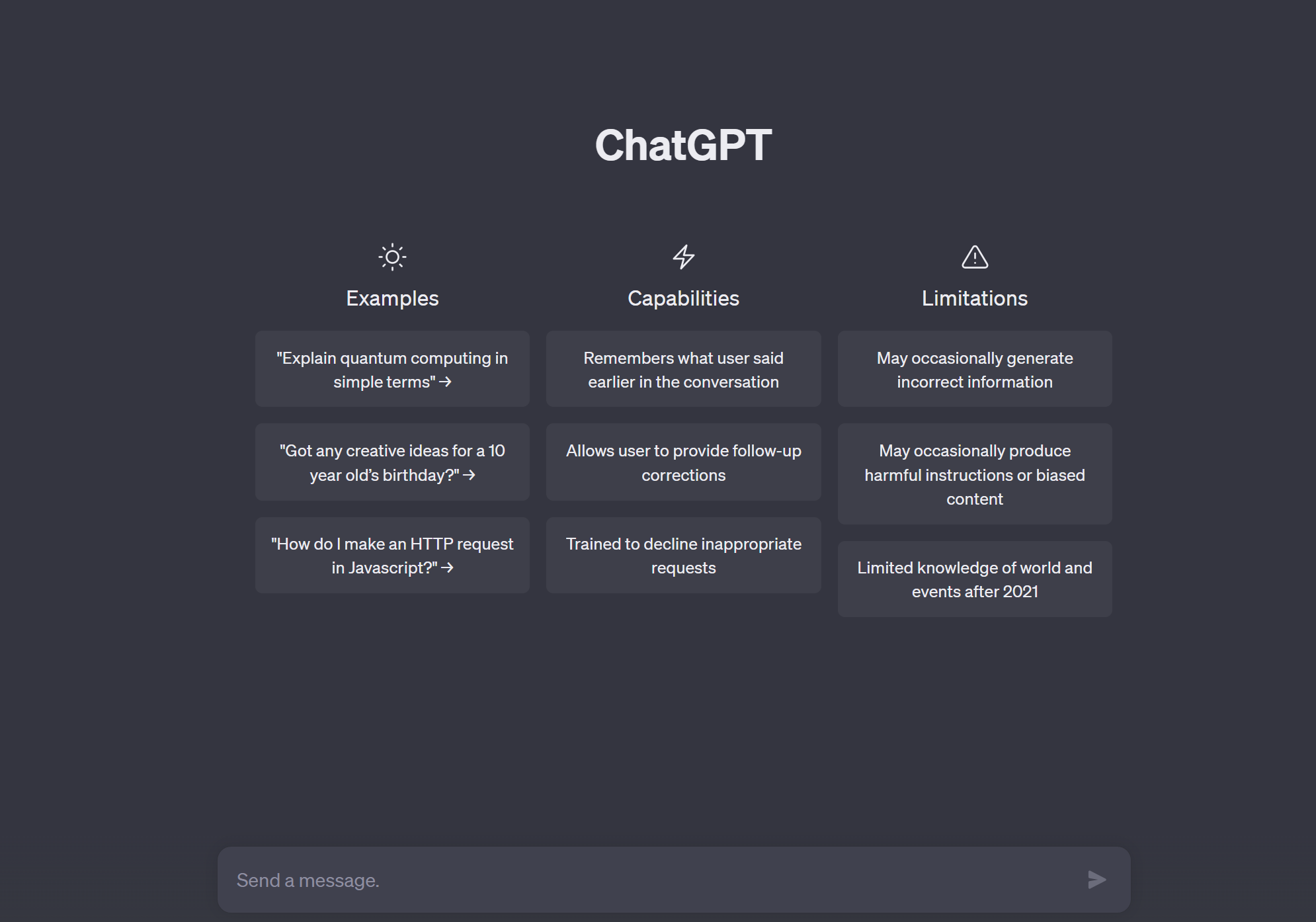

However, beyond this convoluted “black box” of training data, let’s not forget about the humans behind these models. We humans have our own biases, whether we like it or not. When we’re involved in training these language models, we might unknowingly inject our own biases into the mix. It’s like accidentally seasoning the soup with a little too much salt. These biases can seep into the model’s decision-making, its understanding, and even the responses it generates. In the case of OpenAI’s ChatGPT, such biases can trickle in almost unnoticed through reinforcement learning from human feedback (RLHF), a critical process that guides the chatbot’s learning.

The Impact of Bias and How We Can Address It:

The ramifications of bias in large language models can be far-reaching and potentially harmful. Biased predictions and responses can reinforce stereotypes, perpetuate discrimination, and amplify social inequalities. For instance, AI systems have been found to recognize the faces of dark-skinned individuals less accurately, to assign different credit card limits based on gender, and to make racially skewed predictions about future criminal activity.

Let's dive into some ambitious and helpful solutions:

- The Proactive Approach: It is widely acknowledged that addressing bias from the beginning of the model development process is more effective and cost-efficient than attempting to remove it later. This approach calls for businesses and organizations to implement systems, processes, and tools specifically designed to identify and mitigate bias during the training and deployment stages.

- The Diverse and Representative Training Data: To tackle biases arising from skewed or incomplete training datasets, it is crucial to include diverse and representative data. Efforts should be made to ensure that underrepresented groups are not marginalized, improving the fairness and inclusivity of the training data.

- The Ethical Frameworks and Governance: Establishing ethical frameworks and governance mechanisms can provide guidelines for responsible and unbiased AI usage. These frameworks should emphasize transparency, accountability, and the continuous monitoring and evaluation of AI systems for bias detection and mitigation.

- Logic-Aware Language Models: Research, such as the work conducted by MIT’s CSAIL, demonstrates the potential of logic-aware language models to reduce harmful stereotypes. By incorporating logic into the model training process, these models exhibit significantly lower bias without the need for additional data editing or training algorithms.

- Enhanced Tools for Bias Assessment: Developing advanced tools and techniques for tracking, assessing, and measuring bias in language models is crucial. Startups are emerging to address this need, offering AI management solutions that can assist in identifying and mitigating bias more effectively.
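Bias-assessment tools vary widely, but many start from simple group-level metrics. The sketch below is a minimal illustration, assuming a model that makes yes/no decisions (for example, approving a credit application) and a known group label for each example. It computes demographic parity, one of several common fairness metrics: the rate of positive decisions per group and the largest gap between groups. The function names and toy data are hypothetical and not taken from any specific tool.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive predictions (1s) for each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical toy data: 1 = model approved the applicant, 0 = rejected.
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(preds, groups))
    print(demographic_parity_gap(preds, groups))
```

In this toy data, group A is approved 75% of the time and group B 25%, giving a gap of 0.5. A single number like this is easy to track over time, though parity on one metric does not by itself show that a model is free of bias.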

Moving Forward:

Bias in large language models poses significant challenges that require careful attention and proactive measures from organizations and developers. By understanding the causes and implications of bias, and by adopting solutions such as diverse training data, ethical frameworks, logic-aware models, and enhanced assessment tools, we can take real strides toward building fairer, more inclusive, and less biased AI systems. Only time will tell how such models may evolve in their scope and impartiality.