Navigating the Uncertain Terrain of ChatGPT Plug-Ins: A Need for Security

Published on 7 October 2023.

Introduction:

In the span of eight months, ChatGPT, the brainchild of OpenAI, has captivated millions with its ability to generate a wide range of text, from short stories to intricate code. That versatility comes with a caveat: ChatGPT’s expansion into plug-ins, which promise enhanced functionality, raises significant concerns about user privacy and data security.

Challenges and Response:

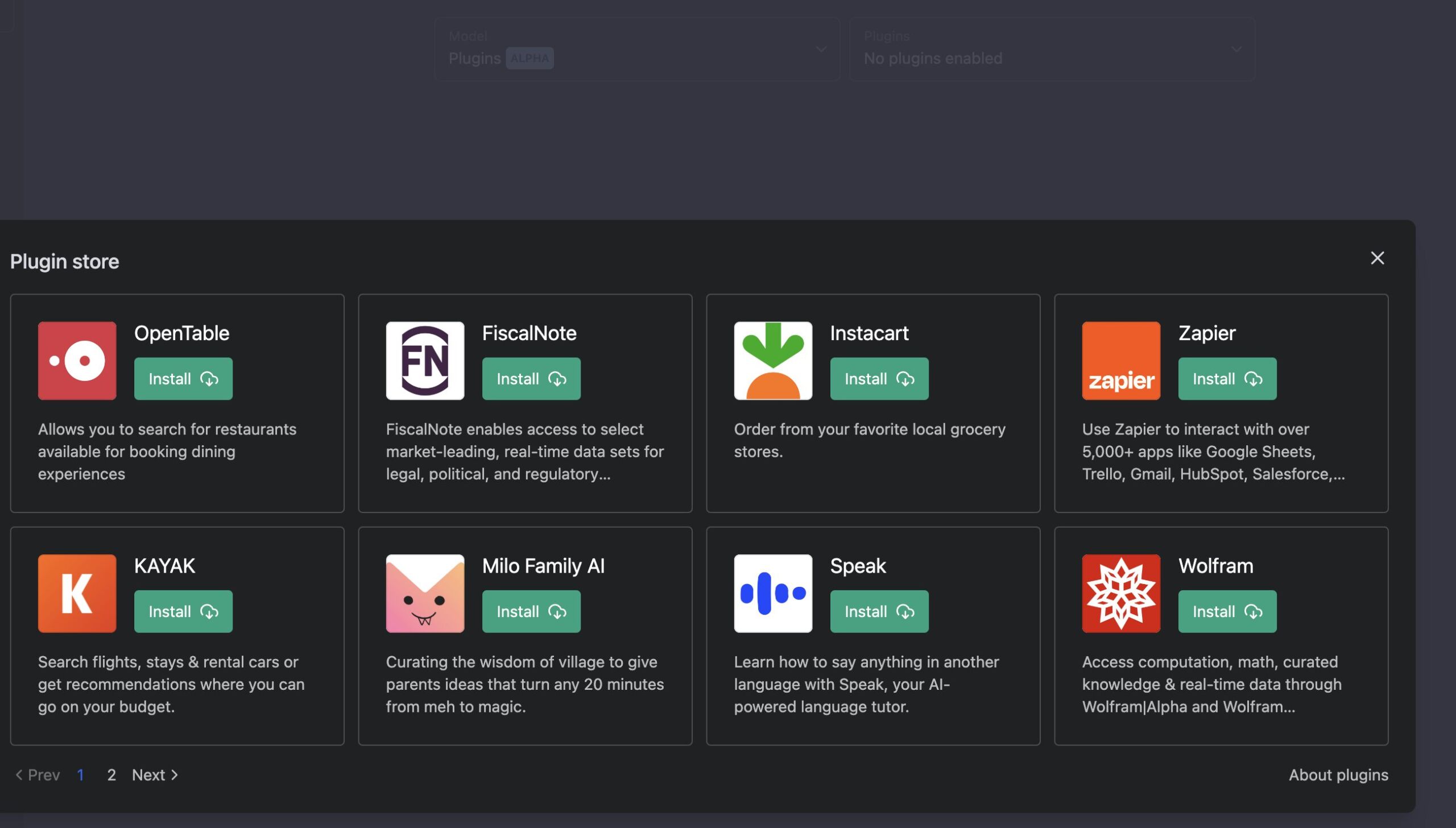

At its core, ChatGPT operates by responding to user prompts, drawing on data collected from the internet up until September 2021. Plug-ins, introduced in March 2023, were meant to augment its capabilities, enabling tasks such as flight bookings and website analysis. However, as the plug-in ecosystem expands, security researchers such as Johann Rehberger have highlighted vulnerabilities that could compromise user data. These issues include the misuse of OAuth, which could lead to data theft and even remote code execution.

OpenAI acknowledges these challenges and says it is working to address potential exploits. Despite its efforts, concerns persist. Plug-ins, though revolutionary, lack robust security measures, potentially exposing users to risks such as prompt injection attacks and data theft. OpenAI’s guidance for plug-in developers includes content guidelines and requires a manifest file, but crucial details about who the developers are and how they use data are often obscured.
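To make the manifest point concrete, here is a minimal Python sketch of how a cautious user or reviewer might inspect a plug-in manifest before installing it. It assumes the field names from OpenAI's published `ai-plugin.json` format (`contact_email`, `legal_info_url`, `auth.type`, `api.url`). The function name `review_manifest`, the choice of checks, and the sample manifest are hypothetical, and nothing here is an official OpenAI tool.

```python
import json

# Manifest fields that tell a user who is behind a plug-in.
# Treating these two as the "disclosure" fields is an assumption of this sketch.
DISCLOSURE_FIELDS = ("contact_email", "legal_info_url")


def review_manifest(manifest: dict) -> list[str]:
    """Return human-readable warnings about a plug-in manifest (illustrative only)."""
    warnings = []

    # Flag missing or blank developer-disclosure fields.
    for field in DISCLOSURE_FIELDS:
        if not str(manifest.get(field) or "").strip():
            warnings.append(f"missing or empty '{field}'")

    # OAuth plug-ins can reach into a user's other accounts, so call them out.
    auth_type = (manifest.get("auth") or {}).get("type", "none")
    if auth_type == "oauth":
        warnings.append("uses OAuth: check which account data the plug-in can reach")

    # The API description should at least be served over HTTPS.
    api_url = (manifest.get("api") or {}).get("url", "")
    if not api_url.startswith("https://"):
        warnings.append("API specification is not served over HTTPS")

    return warnings


if __name__ == "__main__":
    # Hypothetical manifest, invented for this example.
    sample = json.loads("""
    {
      "schema_version": "v1",
      "name_for_human": "Example Flights",
      "auth": {"type": "oauth"},
      "api": {"type": "openapi", "url": "https://example.com/openapi.yaml"},
      "contact_email": "",
      "legal_info_url": "https://example.com/legal"
    }
    """)
    for warning in review_manifest(sample):
        print("WARNING:", warning)
```

A check like this only surfaces what a developer chose to declare. It cannot verify how the plug-in actually handles data, which is why the concerns about transparency remain.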

Microsoft, a key investor in OpenAI, plans to adopt similar plug-in standards for its products. However, the industry-wide rush to harness the power of Large Language Models (LLMs) has outpaced comprehensive security frameworks. The Open Worldwide Application Security Project (OWASP) has identified ten major security threats for LLM applications, with prompt injection attacks at the top of the list and insecure plug-in design also included.

Takeaways:

In light of these challenges, users must exercise caution when engaging with public LLMs. The appeal of seamless integration and expanded capabilities must be balanced against a keen awareness of the risks. As the technology evolves, both developers and users need to prioritize security and demand transparency and stringent safeguards from platform providers. The transformative potential of LLMs and their plug-ins is vast, but a secure digital future hinges on responsible innovation and vigilance against emerging threats.
