Navigating the Regulation of Artificial Intelligence: Who Will Take the Lead?

First Published 7 June 2023.

Context:

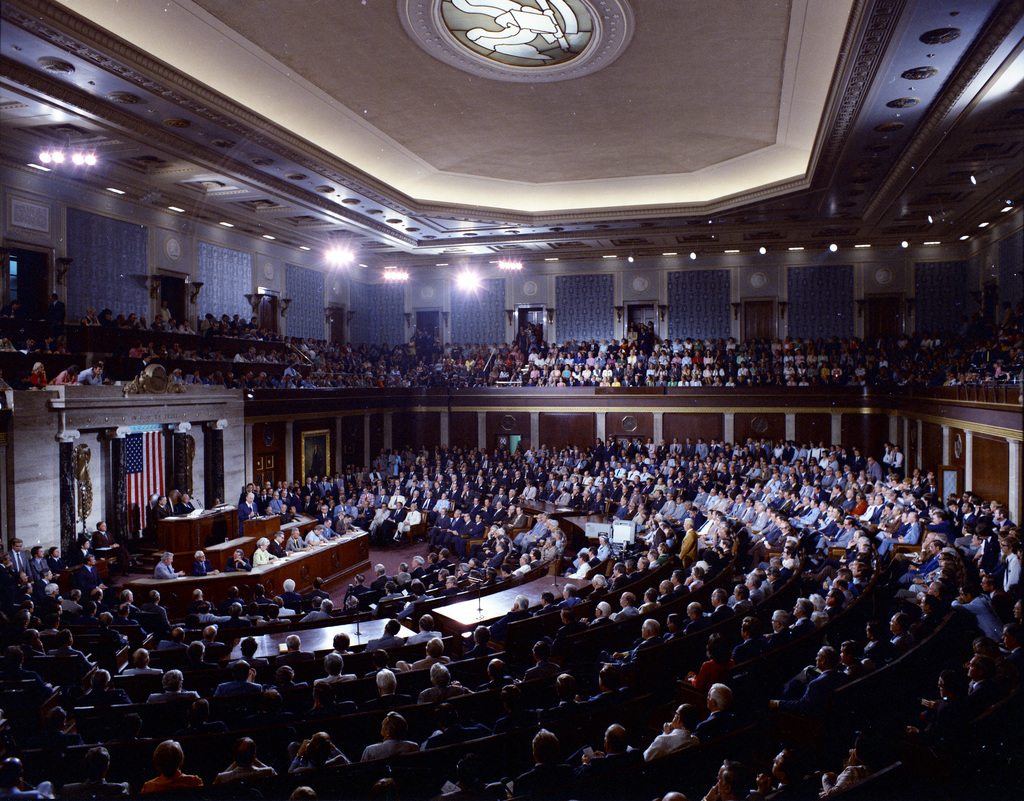

The rapid advancement of artificial intelligence (AI) technology has raised concerns about its potential impact on society. As AI systems become more powerful, there is a growing need for regulation to address the risks and ensure responsible use. In recent testimony before Congress, Sam Altman, the CEO of OpenAI, emphasized the importance of setting limits on powerful AI systems. This article explores the current debates surrounding AI regulation and discusses the potential role of different stakeholders in shaping its future.

The Need for Regulation:

Concerns about AI's transformative effects on many aspects of life have gained prominence. Sam Altman's acknowledgment of public anxiety reflects the need to address the potential risks associated with AI. Altman and other developers recognize that if AI technology goes wrong, the consequences could be significant. Government oversight and regulation are essential for mitigating these risks and ensuring the responsible development and use of AI systems.

So what actions have been taken?

(1) Senate Majority Leader Chuck Schumer has called for preemptive legislation to establish regulatory “guardrails” on AI products and services. However, the proposal's vagueness raises questions about its effectiveness.

(2) Various federal agencies within the Biden Administration are looking to implement an “AI Bill of Rights,” which focuses on ensuring that AI systems are safe, non-discriminatory, and respectful of privacy. However, clear definitions and specific guidelines have yet to be established.

(3) The National Telecommunications and Information Administration (NTIA) has opened an inquiry into the usefulness of audits and certifications for AI systems. This targeted inquiry is a step in the right direction for effective regulation. Meanwhile, state-level initiatives have begun, reflecting the diverse approaches to AI regulation across the United States.

(4) The European Union has taken a proactive approach to AI regulation, advancing substantial new legislation, most notably the proposed AI Act. Its provisions focus on risk assessment, pre-approval, licensing, and substantial fines for violations. The US should ramp up its own legislative efforts to keep pace with similar international initiatives.

Challenges:

Many proposed regulations lack specificity, and the hypothetical harms they target often fall into existing categories such as misinformation and intellectual property infringement. Clear definitions and guidelines are necessary to address the unique challenges posed by AI systems. Proposed regulations also often require additional legal authority and face political obstacles, while the fragmented legal landscape and the slow pace of lawmaking limit their immediate impact on AI development. Governments have historically struggled to attract the technical expertise needed to define and address the potential harms of AI systems; collaboration with industry experts and the creation of regulatory bodies with relevant expertise can help bridge this gap.

In conclusion, regulating AI poses challenges stemming from ambiguous regulations, slow-paced lawmaking, and the global nature of AI. Overcoming them requires clear definitions and international cooperation to establish consistent regulatory standards.
