Regulating ChatGPT and other Large Generative AI Models

First Published on 11 August 2023.

Literature Review of “Regulating ChatGPT and other Large Generative AI Models”

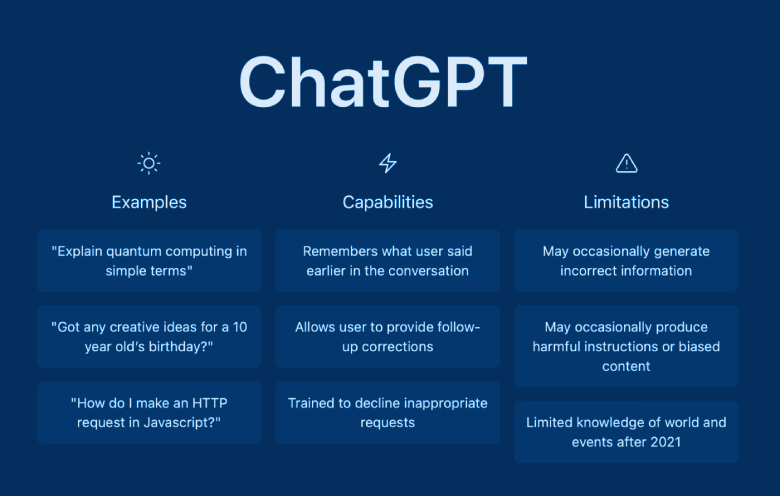

This paper presents policy proposals for regulating Large Generative AI Models (LGAIMs), with a focus on models like ChatGPT. The authors argue that technology-specific regulation can quickly become outdated given the rapid pace of AI innovation, and they urge more technology-neutral approaches. They advocate a three-layer regulatory framework. The first layer consists of existing technology-neutral regulation, such as the GDPR and non-discrimination provisions. The second layer makes concrete high-risk applications, rather than the pre-trained models themselves, the subject of high-risk obligations. The third layer requires collaboration between actors in the AI value chain for compliance purposes. Specific policy recommendations include detailed transparency obligations for developers, deployers, and users, as well as expanding content moderation rules to cover LGAIMs, including notice and action mechanisms, trusted flaggers, and comprehensive audits for large LGAIM developers. The paper emphasizes the urgency of updating regulation to keep pace with the dynamic development of AI models like GPT-4 and to ensure a level playing field for future AI technologies.

In summary, the paper highlights the need for nuanced and adaptable regulation of LGAIMs that addresses both technological specifics and broader concerns such as privacy, non-discrimination, and content moderation. The authors propose a three-layer regulatory approach that targets high-risk applications, encourages cooperation among AI actors, and provides clear guidelines for transparency and content regulation. By adopting these recommendations, regulators can balance fostering AI innovation against safeguarding societal interests as AI technologies evolve rapidly.
