The Ethical Dilemma of Predictive Policing: Can AI Make Fair Decisions?

First published on 9 September 2023.

Introduction

In an era of rapid technological advancement, artificial intelligence (AI) plays an increasingly significant role in our lives, from influencing hiring decisions to determining credit scores. One of the most consequential areas where AI is making its presence felt is law enforcement, through the practice of predictive policing: using algorithms to identify potential crime hotspots and patterns from historical data. While this technology promises to make policing more efficient and effective, it raises important questions about fairness, bias, and accountability.

The Problem of Bias in Predictive Policing

The notion that AI can make decisions free of human bias is increasingly being challenged. A prominent example is COMPAS, a risk-assessment algorithm used by judges to estimate how likely a defendant is to commit future crimes. A 2016 investigation by ProPublica found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be labeled high risk, raising questions about the fairness of its scores. This revelation sparked a broader debate about the trustworthiness of algorithms in the criminal justice system.
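
To make that kind of finding concrete, the sketch below computes one fairness metric, the false positive rate per group: among people who did not reoffend, the share the tool nonetheless labeled high risk. The records, group labels, and the `Case` and `false_positive_rate` names are hypothetical illustrations; this is not COMPAS's scoring method or ProPublica's actual analysis code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """One hypothetical defendant record (not real COMPAS data)."""
    group: str          # demographic group label
    flagged_high: bool  # did the risk tool label this person high risk?
    reoffended: bool    # did the person actually reoffend during follow-up?


def false_positive_rate(cases: list[Case], group: str) -> float:
    """Share of non-reoffenders in `group` who were still flagged high risk."""
    negatives = [c for c in cases if c.group == group and not c.reoffended]
    if not negatives:
        return float("nan")  # undefined when the group has no non-reoffenders
    return sum(c.flagged_high for c in negatives) / len(negatives)


# Toy data: each group has exactly 4 people who did not reoffend.
cases = (
    [Case("A", True, False)] * 2 + [Case("A", False, False)] * 2
    + [Case("A", True, True)] * 3
    + [Case("B", True, False)] * 1 + [Case("B", False, False)] * 3
    + [Case("B", True, True)] * 2
)

fpr_a = false_positive_rate(cases, "A")  # 2 of 4 -> 0.5
fpr_b = false_positive_rate(cases, "B")  # 1 of 4 -> 0.25
print(f"Group A FPR: {fpr_a:.2f}, Group B FPR: {fpr_b:.2f}, ratio: {fpr_a / fpr_b:.1f}")
```

A ratio of 2, as in this toy data, is the kind of disparity ProPublica reported. Other fairness definitions, such as requiring equal precision across groups, can reach a different verdict on the same scores, which is part of why the COMPAS findings remain debated.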

CrimeScan and Predictive Policing

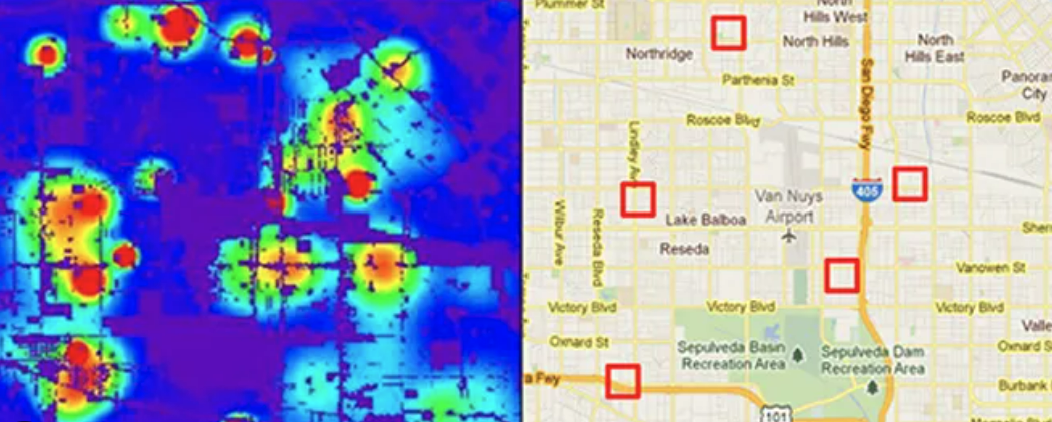

CrimeScan, developed by computer scientists Daniel Neill and Will Gorr at Carnegie Mellon University, is one of the AI tools designed for predictive policing. Their approach treats violent crime like a communicable disease: it tends to break out in geographic clusters. CrimeScan uses a wide range of data, including reports of minor crimes, 911 calls, and seasonal trends, to predict where crime hotspots may emerge. The goal is to detect the “sparks” of lesser offenses and disturbances before they turn into the “fires” of serious violent crime.
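
The leading-indicator idea can be illustrated with a toy scoring rule: divide the city into grid cells, count recent minor offenses and 911 calls in each cell, scale by a seasonal factor, and flag cells whose score crosses a threshold. The weights, seasonal multipliers, threshold, and function names below are hypothetical; the source does not describe CrimeScan's actual model, which would be fitted to historical data rather than hand-set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CellActivity:
    """Recent leading-indicator counts for one grid cell (hypothetical)."""
    cell_id: str
    minor_crimes: int  # reports of minor offenses, e.g. vandalism
    calls_911: int     # 911 calls originating in the cell


# Hypothetical, hand-picked weights; a real system would estimate these.
WEIGHTS = {"minor_crimes": 1.0, "calls_911": 0.5}
# Hypothetical seasonal multipliers standing in for "seasonal trends".
SEASONAL = {"winter": 0.8, "spring": 1.0, "summer": 1.3, "autumn": 1.0}


def risk_score(cell: CellActivity, season: str) -> float:
    """Weighted sum of leading indicators, scaled by the season."""
    base = (WEIGHTS["minor_crimes"] * cell.minor_crimes
            + WEIGHTS["calls_911"] * cell.calls_911)
    return base * SEASONAL[season]


def flag_hotspots(cells: list[CellActivity], season: str, threshold: float) -> list[str]:
    """Return IDs of cells whose score exceeds the threshold, in input order."""
    return [c.cell_id for c in cells if risk_score(c, season) > threshold]


cells = [
    CellActivity("A1", minor_crimes=2, calls_911=4),   # base score 4.0
    CellActivity("B2", minor_crimes=6, calls_911=10),  # base score 11.0
    CellActivity("C3", minor_crimes=1, calls_911=1),   # base score 1.5
    CellActivity("D4", minor_crimes=5, calls_911=2),   # base score 6.0
]

print(flag_hotspots(cells, "winter", threshold=5.0))  # ['B2']
print(flag_hotspots(cells, "summer", threshold=5.0))  # ['A1', 'B2', 'D4']
```

The same low-level activity produces more flagged cells in summer than in winter, which mirrors the intuition of reacting to rising minor activity before serious crime appears.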

PredPol, another predictive policing program, has been used by more than 60 police departments across the United States to identify areas where serious crimes are more likely to occur. The company claims that its software is twice as accurate as human analysts at predicting where crimes will occur, but these results have not been independently verified.
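
The source does not say how “twice as accurate” was measured. One common yardstick in hotspot research is the hit rate (the share of crimes that fall inside flagged areas), often normalized as the Predictive Accuracy Index (PAI), which divides the hit rate by the share of the map that was flagged so that a method cannot score well simply by flagging everything. The sketch below assumes that metric and uses made-up numbers; it is not PredPol's evaluation method.

```python
def hit_rate(crimes_in_flagged: int, total_crimes: int) -> float:
    """Fraction of all crimes that occurred inside the flagged areas."""
    return crimes_in_flagged / total_crimes


def predictive_accuracy_index(crimes_in_flagged: int, total_crimes: int,
                              flagged_area: float, total_area: float) -> float:
    """PAI = (crimes_in_flagged / total_crimes) / (flagged_area / total_area).

    Computed as a single division to avoid compounding rounding error.
    """
    return (crimes_in_flagged * total_area) / (total_crimes * flagged_area)


# Hypothetical comparison: both methods flag 5 km^2 of a 100 km^2 city,
# and 100 crimes occur during the evaluation period.
print(hit_rate(20, 100), hit_rate(10, 100))  # 0.2 0.1
algorithm_pai = predictive_accuracy_index(20, 100, flagged_area=5, total_area=100)
analyst_pai = predictive_accuracy_index(10, 100, flagged_area=5, total_area=100)
print(algorithm_pai, analyst_pai, algorithm_pai / analyst_pai)  # 4.0 2.0 2.0
```

Because both methods flag the same area here, a doubled hit rate means a doubled PAI. A claim like “twice as accurate” is only meaningful if the comparison holds the flagged area and time window fixed, which is one reason independent verification matters.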

The Controversy Surrounding Predictive Policing

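Predictive policing has faced criticism from organizations like the American Civil Liberties Union (ACLU), the Brennan Center for Justice, and various civil rights groups. Their central argument is that historical police data reflect where officers have patrolled and whom they have arrested, not simply where crime occurs. Algorithms trained on such data can inherit those patterns and reinforce stereotypes about “bad” and “good” neighborhoods: more patrols in a neighborhood produce more recorded incidents there, which in turn justify more patrols. Predictive policing systems that rely heavily on arrest data are particularly susceptible to this bias, because arrests depend on police presence as much as on underlying crime.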
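
The feedback loop critics describe can be shown with a deliberately simplified simulation. Two neighborhoods have the same true crime rate, but one starts with slightly more recorded incidents. Each day, patrols go to whichever neighborhood has the most recorded incidents, and crimes are far more likely to be recorded where officers are present. The detection rates, starting counts, and the `simulate` function are hypothetical assumptions for illustration; this is not a model of any specific vendor's system.

```python
def simulate(initial_records: list[int], true_daily_crimes: list[int], days: int,
             detect_pct_patrolled: int = 60, detect_pct_unpatrolled: int = 10) -> list[int]:
    """Toy model of hotspot patrolling driven by recorded incidents.

    Each day the neighborhood with the most recorded incidents gets the patrol.
    Crimes there are recorded at `detect_pct_patrolled` percent; elsewhere at
    `detect_pct_unpatrolled` percent. Integer percentages keep the math exact.
    """
    records = list(initial_records)
    for _ in range(days):
        # "Prediction": patrol wherever the historical record is highest.
        hotspot = max(range(len(records)), key=lambda i: records[i])
        for i, crimes in enumerate(true_daily_crimes):
            pct = detect_pct_patrolled if i == hotspot else detect_pct_unpatrolled
            records[i] += crimes * pct // 100
    return records


# Both neighborhoods truly have 10 crimes per day; neighborhood 0 starts
# with a small historical excess of recorded incidents (12 vs 10).
final = simulate([12, 10], [10, 10], days=30)
share = final[0] / sum(final)
print(final, f"neighborhood 0 share of records: {share:.0%}")
# [192, 40] neighborhood 0 share of records: 83%
```

Neighborhood 0's share of recorded incidents climbs from about 55% (12 of 22) to about 83%, even though true crime is identical in both places. The data the system learns from increasingly reflect where police looked rather than where crime happened.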

Bias is not the only concern. Forecasting crime in certain neighborhoods may also encourage more aggressive policing there, straining the relationship between officers and the communities they serve.

The Challenge of Accountability

A major challenge in the use of AI in law enforcement is the lack of transparency and accountability. Many of these systems are proprietary, which makes it difficult for the public to understand how they work. And as machine learning models grow more complex, even the engineers who build them may struggle to explain how they reach their outputs; such systems are known as “black box” algorithms.

The AI Now Institute has recommended that public agencies responsible for criminal justice, healthcare, welfare, and education stop using black box AI systems, arguing that legal and ethical considerations are often overlooked when such systems are developed.

Conclusion

Ultimately, AI should support human decision-making, not replace it. Efforts are underway to promote fairness and accountability in the use of AI in law enforcement. The Center for Democracy & Technology has created a “digital decisions” tool to help engineers and computer scientists design algorithms that avoid biased results. It encourages them to consider carefully how data are represented, who might be excluded, and what unintended consequences might follow.

The AI Now Institute advocates for “algorithmic impact assessments,” which would require public agencies to disclose the AI systems they use and allow outside researchers to analyze those systems for potential problems. This transparency is essential for building trust between law enforcement agencies and their communities.